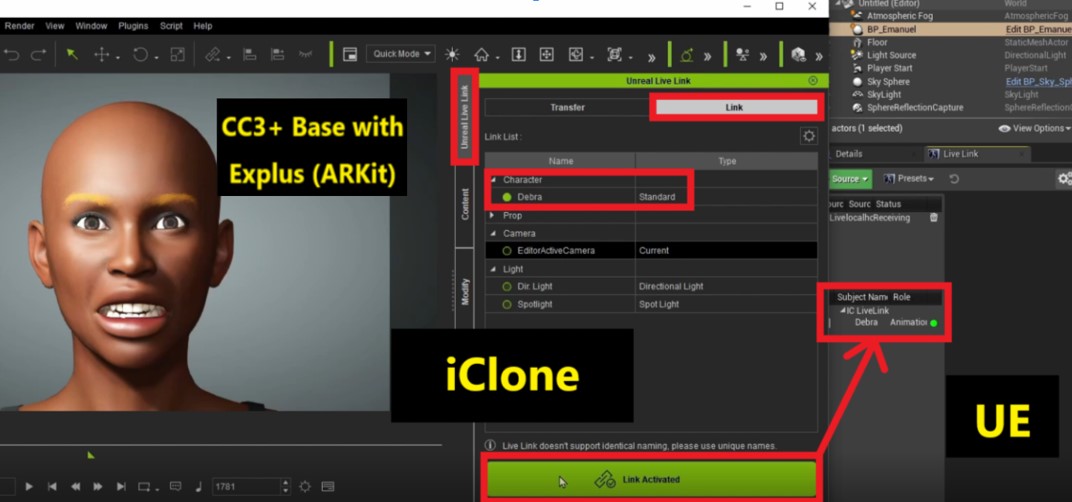

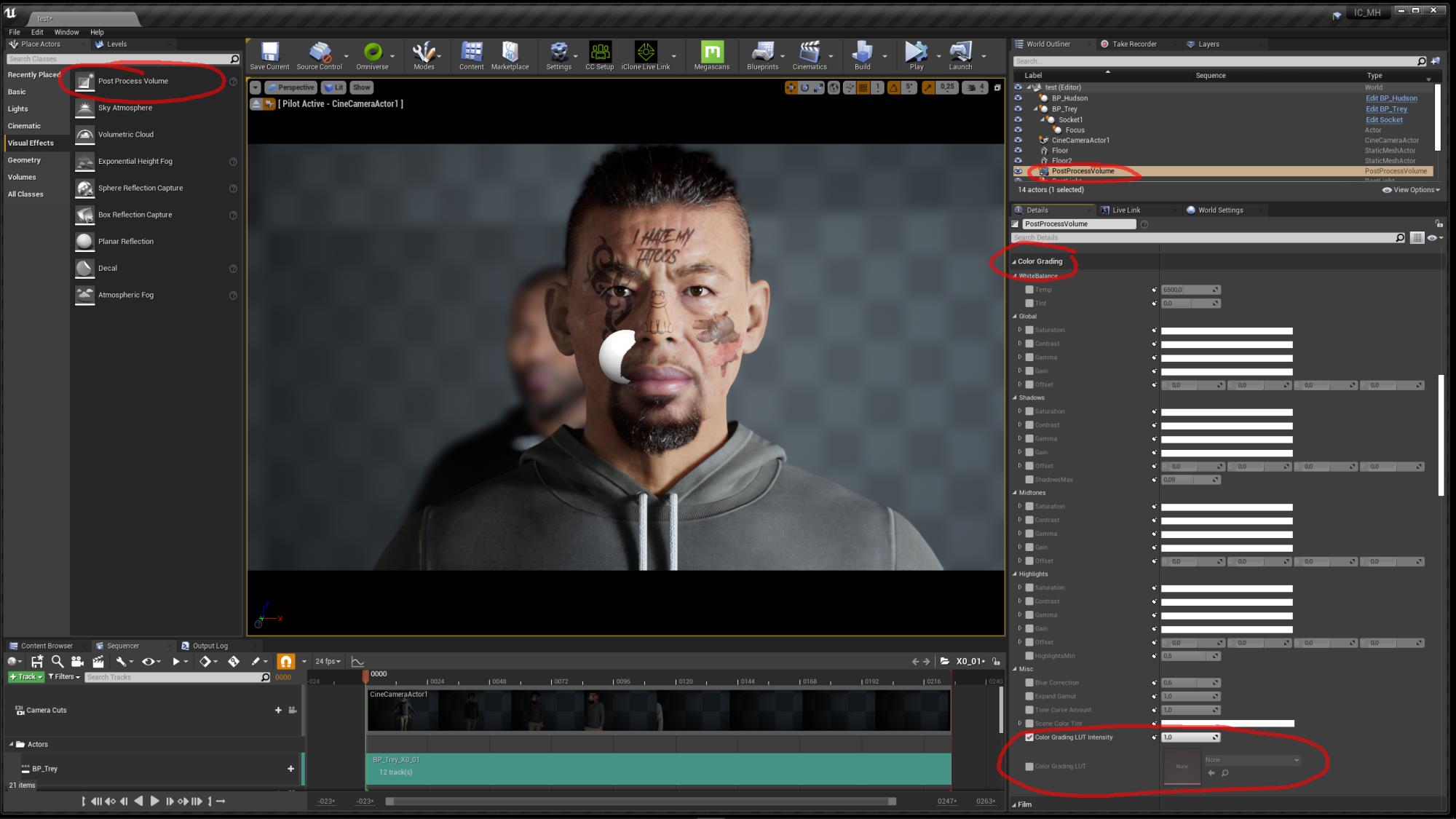

The material on this page refers to several different tools and functional areas of Unreal Engine. You'll have best results if you're already familiar with the following material:

You'll need to have a model of iPhone that has both a depth camera and ARKit. These capabilities are typically included in iPhone X and later.

Follow the instructions in this section to set up your Unreal Engine Project, connect the Live Link Face app, and apply the data being recorded by the app to a 3D character.

Enable the following Plugins for your Project:

You need to have a character set up with a set of blend shapes that match the facial blend shapes produced by ARKit's facial recognition. You'll typically need to do this in a third-party rigging and animation tool, such as Autodesk Maya, then import the character into Unreal Engine. For a list of the blend shapes your character will need to support, see the Apple ARKit documentation.

If you need to broadcast your animations to multiple Unreal Editor instances, you can enter multiple IP addresses here. See also the Working with Multiple Users section below. For details on all the other settings available for the Live Link Face app, see the sections below.

In the Unreal Editor, open the Live Link panel by selecting Window > Live Link from the main menu. You should now see your iPhone listed as a subject.

In your character's animation graph, find the Live Link Pose node and set its subject to the one that represents your iPhone. Compile and Save the animation Blueprint.

In the Details panel, ensure that the Update Animation in Editor setting in the Skeletal Mesh category is enabled.

Back in Live Link Face, point your phone's camera at your face until the app recognizes your face and begins tracking your facial movements. At this point, you should see the character in the Unreal Editor begin to move its face to match yours in real time.

When you're ready to record a performance, tap the red Record button in the Live Link Face app. This begins recording the performance on the iPhone, and also launches Take Recorder in the Unreal Editor to begin recording the animation data on the character in the engine.
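The blend shape requirement described above can be checked before importing a character. The following is a minimal Python sketch, not part of Unreal Engine or the Live Link Face app: it compares a list of morph target names (which you would export from your rigging tool yourself) against the 52 blend shape coefficients ARKit reports for a face. The function name `missing_blend_shapes` and the sample list `rig_names` are hypothetical, and matching names case-insensitively is an assumption made for convenience.

```python
# ARKit's 52 face blend shape coefficient names (ARFaceAnchor.BlendShapeLocation).
ARKIT_BLEND_SHAPES = frozenset({
    "browDownLeft", "browDownRight", "browInnerUp", "browOuterUpLeft",
    "browOuterUpRight", "cheekPuff", "cheekSquintLeft", "cheekSquintRight",
    "eyeBlinkLeft", "eyeBlinkRight", "eyeLookDownLeft", "eyeLookDownRight",
    "eyeLookInLeft", "eyeLookInRight", "eyeLookOutLeft", "eyeLookOutRight",
    "eyeLookUpLeft", "eyeLookUpRight", "eyeSquintLeft", "eyeSquintRight",
    "eyeWideLeft", "eyeWideRight", "jawForward", "jawLeft", "jawOpen",
    "jawRight", "mouthClose", "mouthDimpleLeft", "mouthDimpleRight",
    "mouthFrownLeft", "mouthFrownRight", "mouthFunnel", "mouthLeft",
    "mouthLowerDownLeft", "mouthLowerDownRight", "mouthPressLeft",
    "mouthPressRight", "mouthPucker", "mouthRight", "mouthRollLower",
    "mouthRollUpper", "mouthShrugLower", "mouthShrugUpper", "mouthSmileLeft",
    "mouthSmileRight", "mouthStretchLeft", "mouthStretchRight",
    "mouthUpperUpLeft", "mouthUpperUpRight", "noseSneerLeft",
    "noseSneerRight", "tongueOut",
})


def missing_blend_shapes(morph_target_names):
    """Return the ARKit blend shape names absent from the character, sorted.

    Names are compared case-insensitively (an assumption of this sketch).
    """
    available = {name.lower() for name in morph_target_names}
    return sorted(
        name for name in ARKIT_BLEND_SHAPES if name.lower() not in available
    )


if __name__ == "__main__":
    # Hypothetical morph target names exported from a rigging tool.
    rig_names = ["JawOpen", "EyeBlinkLeft", "EyeBlinkRight"]
    missing = missing_blend_shapes(rig_names)
    print(f"{len(missing)} ARKit blend shapes are missing from the rig")
```

An empty result means every ARKit coefficient has a same-named morph target on the character. It does not verify that the shapes themselves deform the face correctly.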
